Improving the customer and user experience

We offer a turnkey service:

- Competitor and data analysis
- Recruitment and administration of research subjects
- Investigating user requirements
- Plotting user journeys and service touch points
- Feature testing
- Usability testing

“What people say, what people do, and what they say they do are entirely different things.”

Margaret Mead

We are also experienced in remote research and testing.

Remote usability testing

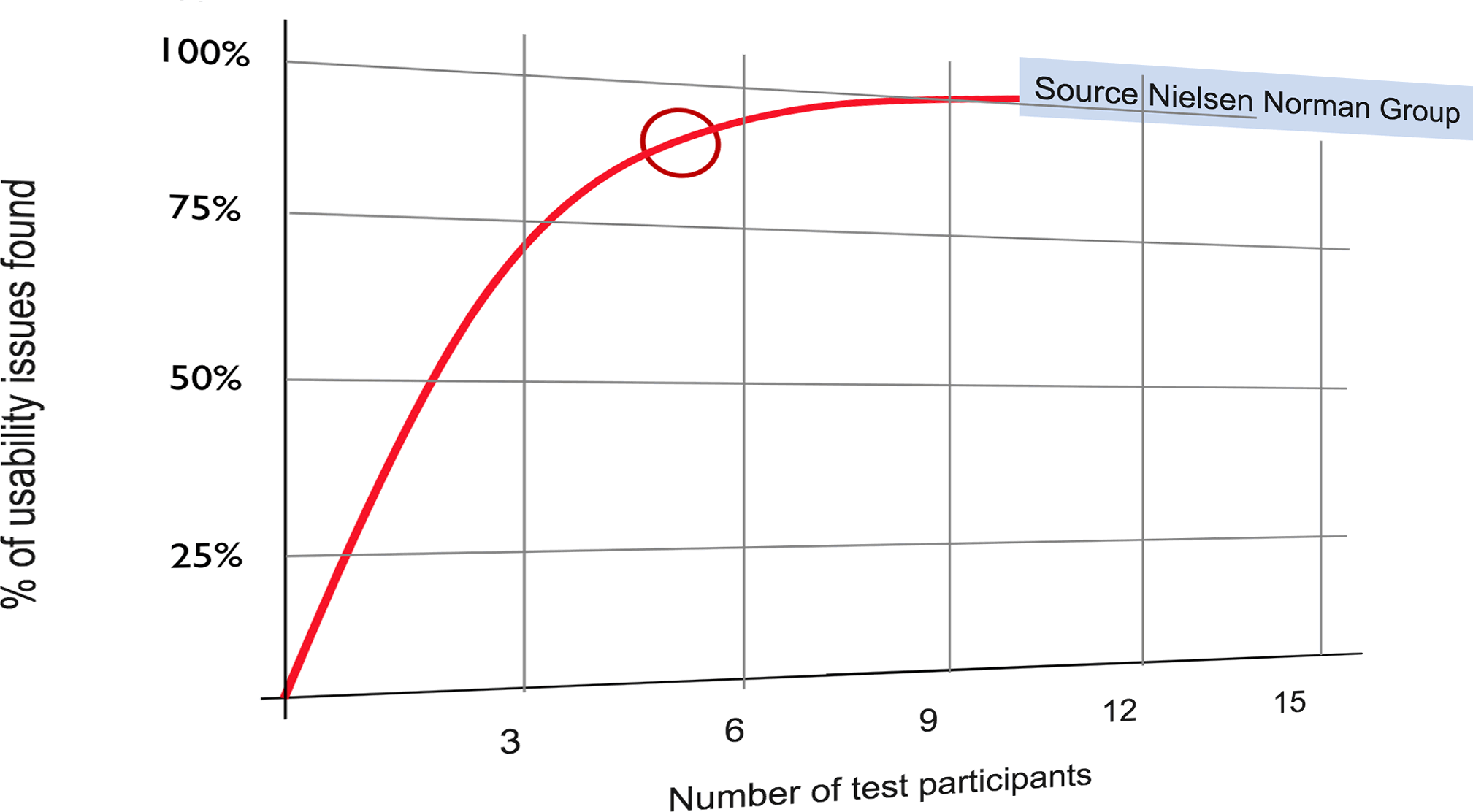

“A day of usability testing with 5 people can uncover 80% of a site’s usability issues.”

Jakob Nielsen

“A well-designed user interface can increase website conversion by 200%.”